- Log in to:

- Community

- DigitalOcean

- Sign up for:

- Community

- DigitalOcean

By Jeff Fan and Anish Singh Walia

How to Deploy DeepSeek R1 on DigitalOcean

This article compares three ways to deploy DeepSeek R1, a cost-effective large language model (LLM), on DigitalOcean. Each approach offers distinct trade-offs in setup complexity, security, fine-tuning, and system-level customization. By the end of this guide, you’ll know which method best matches your technical experience and project requirements.

Overview

DeepSeek R1 is a versatile LLM for text generation, Q&A, and chatbot development. On DigitalOcean, you can deploy DeepSeek R1 in one of three ways:

-

Approach A: GenAI Platform (Serverless)

A platform-based solution that minimizes DevOps overhead and delivers quick results but doesn’t support native fine-tuning. -

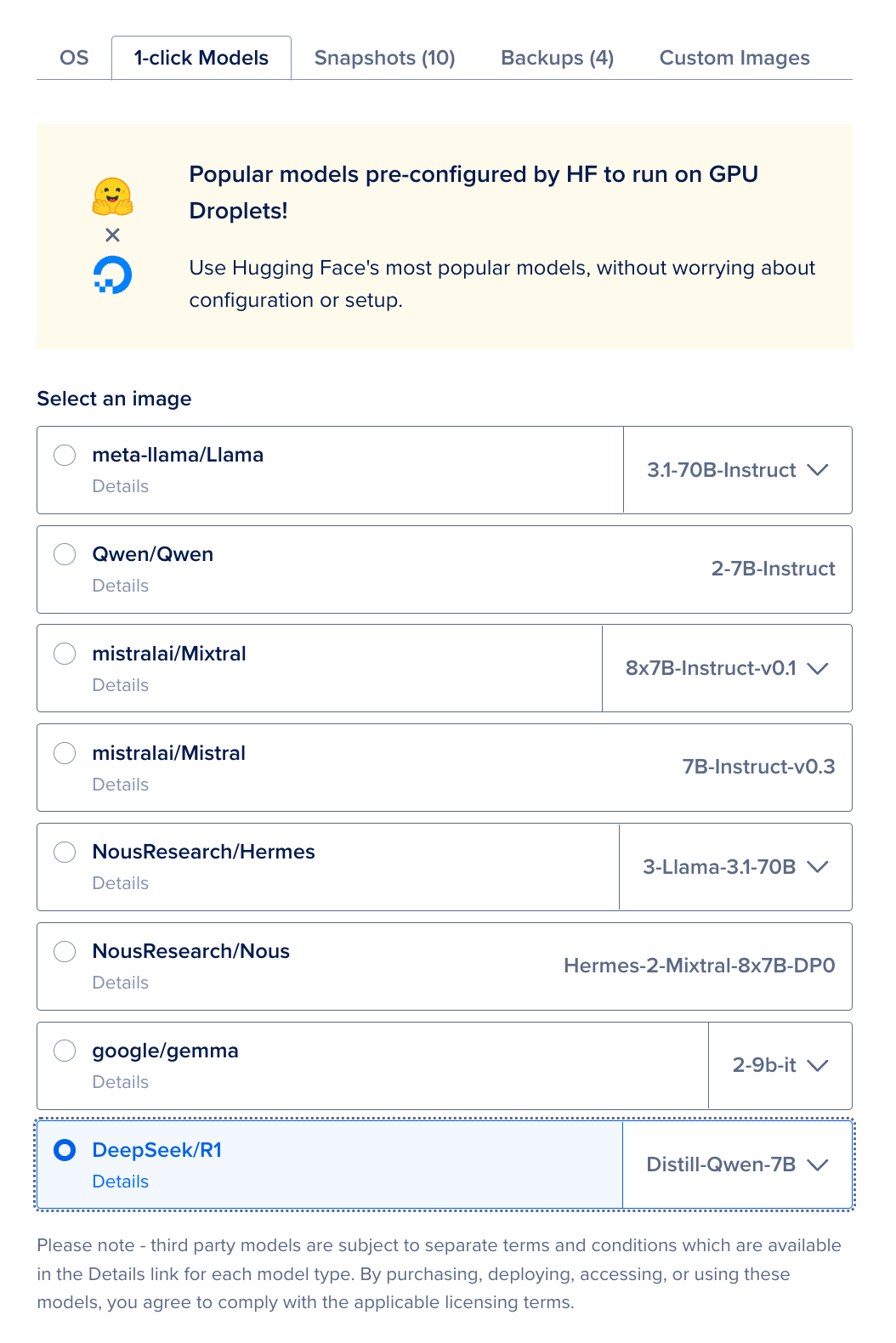

Approach B: DigitalOcean + Hugging Face Generative Service (IaaS)

Offers a prebuilt Docker environment and API token, supports multiple containers on a GPU Droplet, and allows training or fine-tuning. -

Approach C: Dedicated GPU Bare Metal + Ollama (IaaS)

It provides complete control over the OS, security configurations, and model fine-tuning but is more complex.

Using GenAI Platform (Serverless)

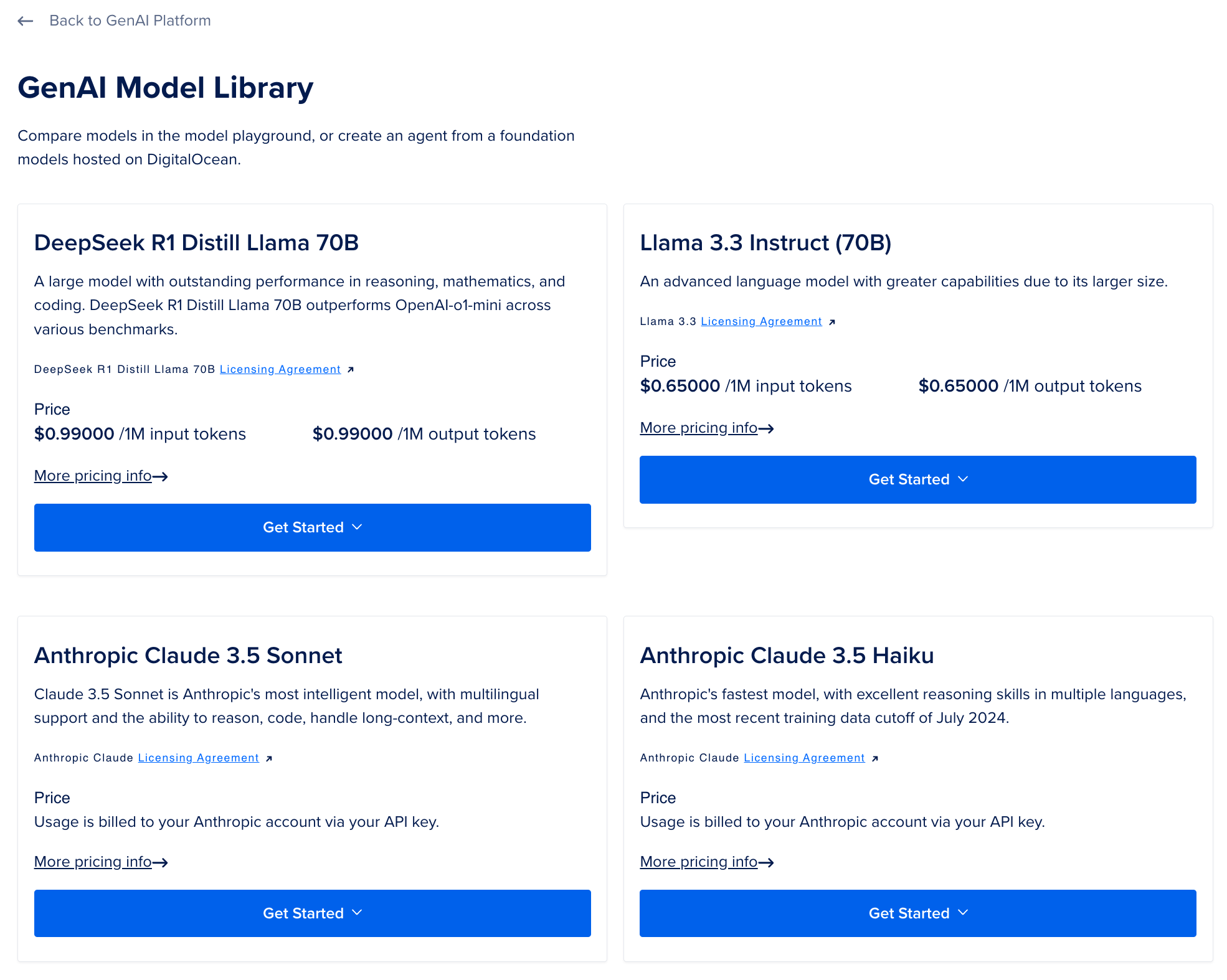

The DigitalOcean GenAI Platform uses a usage-based pricing model:

- Token-Based Billing: You pay for both input and output tokens (tracked per thousand tokens, displayed per million tokens).

- Open-Source Models: For instance, DeepSeek-R1-distill-llama-70B is $0.99 per million tokens. Lower-priced models start at $0.198 per million tokens.

- Commercial Models: If you bring your own API token (e.g., Anthropic), you follow the provider’s rates.

- Knowledge Bases: Additional fees for indexing tokens and vector storage.

- Guardrails: $3.00 per million tokens if you enable jailbreak or content moderation.

- Functions: Billed under DigitalOcean Functions pricing.

Playground Limit: The GenAI playground is free but limited to 10,000 tokens per day, per team (covering both input and output).

Using GPU Droplets

For Approach B (HUGS on IaaS) and Approach C (Ollama on IaaS), you’ll provision a GPU Droplet. Refer to Announcing GPU Droplets for current pricing. At publication:

- Starting at $2.99/GPU/hr on-demand (subject to change).

- Additional fees (like data egress or storage) may apply.

- You’re responsible for OS-level security, scaling, and patches.

Info: Deploy DeepSeek R1, the open-source advanced reasoning model that excels at text generation, summarization, and translation tasks. As one of the most computationally efficient open-source LLMs available, you’ll get high performance while keeping infrastructure costs low with DigitalOcean’s GPU Droplets.

Security and Maintenance

-

Approach A (GenAI Serverless)

- Security patches and maintenance are handled automatically.

- Optional guardrails or KBs incur token-based charges.

-

Approach B (HUGS on a GPU Droplet)

- You manage OS security, Docker environments, and firewall rules.

- The default token-based authentication is provided for the LLM endpoint.

-

Approach C (Ollama on a GPU Droplet)

- You take on the highest level of control and responsibility: OS security, firewall, usage monitoring.

- Ideal for compliance or custom configurations but requires more DevOps work.

-

Performance Benchmarks?

- No official speed metrics or SLAs are currently published. Performance depends on GPU size, data load, and specific workflows.

- If you need help optimizing performance, reach out to our solution architects. We may share future articles on benchmarking techniques.

Approach A: GenAI Platform (Serverless)

Use DigitalOcean’s GenAI Platform for a fully managed DeepSeek R1 deployment without provisioning GPU Droplets or handling OS tasks. Through a user-friendly UI, you can quickly create a chatbot, Q&A flow, or basic RAG setup.

When to Choose the GenAI Platform

- You have limited DevOps experience or prefer not to manage servers.

- You need a quick AI assistant (e.g., a WordPress plugin or FAQ bot).

- You don’t plan to train or fine-tune the model with private data.

Example Scenario: Chelsea’s Local Café Blog

Chelsea hosts a WordPress blog for her café, posting menu updates and community events. She’s comfortable with site hosting but not OS administration:

- She wants a chatbot to answer questions about open hours, menu specials, or local events.

- Guardrails can be added later if she faces problematic content.

- The GenAI Platform demands minimal server management, making it an easy choice.

Not Suitable When

- You need fine-tuning or domain-specific training.

- You must meet strict security needs (private networking, advanced OS rules).

- You plan to run multiple microservices or intensive tasks on a single server.

Approach B: DigitalOcean + Hugging Face Generative Service (HUGS)

This approach suits developers who want a GPU Droplet based solution with HUGS, offering partial sysadmin freedoms (multi-container) and a straightforward API token. It supports training or fine-tuning locally.

When to Choose GPU Droplets

- You want a quicker path to an AI endpoint than a fully manual approach.

- You aim to do some training or fine-tuning on the same GPU Droplet.

- You know Docker basics and don’t mind partial server administration.

Example Scenario: CHFB Labs

CHFB Labs builds fast Proofs of Concept for clients:

- Some clients need domain-specific training or partial fine-tuning.

- Extra Docker containers (e.g., staging or logging) can run on the same Droplet.

- A default access token is included, avoiding custom auth code.

Not Suggested When

- You want a serverless approach (Approach A is simpler).

- You require advanced OS-level tweaks (e.g., kernel modules).

- You prefer a completely manual pipeline with zero pre-configuration.

Approach C: GPU Droplets + Ollama

Use Approach C when you need full control over your GPU Droplet. You can configure OS security, implement custom domain training, and create your own endpoints, albeit with higher DevOps demands.

When to Choose GPU Droplets + Ollama

- You want to manage the OS, Docker, or orchestrators manually.

- You need strict compliance or advanced custom rules (firewalls, specialized networking).

- You plan to fine-tune DeepSeek R1 or run large-scale tasks.

Example Scenario: Mosaic Solutions

Mosaic Solutions provides enterprise analytics:

- They store sensitive data and require encryption or specialized tooling.

- They install Ollama directly to manage exposure of DeepSeek R1.

- They handle OS monitoring, usage logs, and custom performance tuning.

Not Ideal If

- You dislike DevOps tasks or can’t manage OS-level security.

- You want a single-click or minimal-effort deployment.

- You only need a small chatbot with limited usage.

Comparison

Use the table below to compare the three methods:

| Category | Approach A (GenAI Serverless) | Approach B (DO + HUGS, IaaS) | Approach C (GPU + Ollama, IaaS) |

|---|---|---|---|

| SysAdmin Knowledge | Minimal — fully managed UI, no server config | Medium — Docker-based GPU Droplet, partial sysadmin | High — full OS & GPU management, custom security, etc. |

| Flexibility | Medium — built-in RAG, no fine-tuning | High — multi-container usage, optional training/fine-tuning on GPU | High — custom OS, advanced security, domain-specific fine-tuning |

| Setup Complexity | Low — no Droplet provisioning | Medium — create GPU Droplet, launch HUGS container, handle Docker | High — manual environment config, security, scaling |

| Security / API | Managed guardrails, limited endpoint exposure | Token-based by default; can run more services on the same Droplet if needed | DIY — create auth keys, firewall rules, usage monitoring |

| Fine-Tuning | No | Yes — integrated via training scripts | Yes — fully controlled environment for domain training |

| Best For | Non-technical users, quick AI setups, zero DevOps overhead | Teams needing quick PoCs, multi-app on GPU Droplet, partial training | DevOps-savvy teams, specialized tasks, compliance, domain-specific solutions |

| Not Ideal If… | You need fine-tuning or OS-level custom, want multi-LLM | You want a fully serverless approach or advanced OS modifications | You want a quick setup, have no DevOps staff, only need a small chatbot |

Conclusion

Your choice of deployment method depends on how much control you need, whether you want fine-tuning, and how comfortable you are with GPU resource management:

-

Approach A (GenAI Serverless)

- Easiest to begin, no GPU Droplet required.

- Limited customization, no fine-tuning.

- Ideal for a basic chatbot or Q&A (like a WordPress plugin).

-

Approach B (DigitalOcean + HUGS, IaaS)

- Moderate complexity, a prebuilt Docker environment on a GPU Droplet.

- Partial sysadmin for multiple services, supports local fine-tuning.

- A balanced option between convenience and flexibility.

-

Approach C (GPU + Ollama, IaaS)

- Highest control: OS-level security, large-scale tasks, advanced training.

- Suitable for compliance or specialized pipelines.

- Demands significant DevOps expertise.

Next Steps

- You can check out this tutorial on Deploying a Chatbot with GenAI Platform to set up a chatbot using the GenAI Platform in minutes.

- Refer to the Deploying Hugging Face Generative AI Services on DigitalOcean GPU Droplet to spin up a GPU Droplet with HUGS, secure it with a token, and integrate training or fine-tuning.

- You can also refer to this tutorial on Run LLMs with Ollama on H100 GPUs for Maximum Efficiency to learn about complete GPU Droplet control via Ollama, creating custom inference endpoints, and handling domain-specific training.

Happy deploying and fine-tuning!

Thanks for learning with the DigitalOcean Community. Check out our offerings for compute, storage, networking, and managed databases.

About the author(s)

I’m a Senior Solutions Architect in Munich with a background in DevOps, Cloud, Kubernetes and GenAI. I help bridge the gap for those new to the cloud and build lasting relationships. Curious about cloud or SaaS? Let’s connect over a virtual coffee! ☕

I help Businesses scale with AI x SEO x (authentic) Content that revives traffic and keeps leads flowing | 3,000,000+ Average monthly readers on Medium | Sr Technical Writer @ DigitalOcean | Ex-Cloud Consultant @ AMEX | Ex-Site Reliability Engineer(DevOps)@Nutanix

Still looking for an answer?

This textbox defaults to using Markdown to format your answer.

You can type !ref in this text area to quickly search our full set of tutorials, documentation & marketplace offerings and insert the link!

- Table of contents

- How to Deploy DeepSeek R1 on DigitalOcean

- Overview

- Using GenAI Platform (Serverless)

- Using GPU Droplets

- Security and Maintenance

- Approach A: GenAI Platform (Serverless)

- Approach B: DigitalOcean + Hugging Face Generative Service (HUGS)

- Approach C: GPU Droplets + Ollama

- Comparison

- Conclusion

Jump in! Follow our easy steps to deploy and bring your AI SaaS venture to life. DeepSeek on DigitalOcean

Become a contributor for community

Get paid to write technical tutorials and select a tech-focused charity to receive a matching donation.

DigitalOcean Documentation

Full documentation for every DigitalOcean product.

Resources for startups and SMBs

The Wave has everything you need to know about building a business, from raising funding to marketing your product.

Get our newsletter

Stay up to date by signing up for DigitalOcean’s Infrastructure as a Newsletter.

New accounts only. By submitting your email you agree to our Privacy Policy

The developer cloud

Scale up as you grow — whether you're running one virtual machine or ten thousand.

Get started for free

Sign up and get $200 in credit for your first 60 days with DigitalOcean.*

*This promotional offer applies to new accounts only.