- Log in to:

- Community

- DigitalOcean

- Sign up for:

- Community

- DigitalOcean

Technical Writer

Introduction

Image Super-Resolution (ISR) is the process of improving the quality and resolution of a low-resolution (LR) image to a high-resolution (HR) version. This technique enhances finer details, sharpness, and clarity, making it highly valuable in various fields. ISR is widely used in medical imaging to improve the accuracy of diagnoses, in satellite imaging to extract finer geographical details, and in security applications for enhancing surveillance footage. It also plays a crucial role in digital media, helping upscale old or low-quality images and videos while maintaining visual fidelity. With advancements in deep learning and neural networks, modern ISR methods, such as convolutional neural networks (CNNs) and generative adversarial networks (GANs), have significantly improved the effectiveness of this technology, making it indispensable in both academic research and industrial applications. Here are a few of the use cases of ISR discussed in detail:

- Surveillance: To detect, identify, and perform facial recognition on low-resolution images obtained from security cameras.

- Medical: Capturing high-resolution MRI images can be tricky due to scan time, spatial coverage, and signal-to-noise ratio (SNR). Super-resolution helps resolve this by generating high-resolution MRI from low-resolution MRI images.

- Media: Super-resolution can help reduce server costs by allowing media to be transmitted at a lower resolution and upscaled in real time. Deep learning techniques have proven effective in addressing the challenges of image and video super-resolution. This article will explore the underlying theory, various techniques utilized, loss functions, metrics, and the relevant datasets involved.

Key takeaways:

- Image super-resolution is the task of producing a high-resolution image from a low-resolution input, and deep learning has transformed this field by training models that can infer and generate plausible high-frequency details, rather than just smoothing or interpolating the image as traditional methods do.

- Early deep learning models for super-resolution (such as the SRCNN) showed that a simple convolutional neural network could already outperform classic upscaling techniques, and newer architectures—including GAN-based approaches like SRGAN/ESRGAN and even transformer-based models—have further improved results by generating sharper, more realistic details.

- Super-resolution has many practical applications—from enhancing medical and satellite images, to sharpening security camera footage and old photographs, to upscaling video content for higher-quality streaming—making it a valuable tool whenever there’s a need to improve image detail and clarity.

Prerequisites

Before diving into this review on image super-resolution, we should have a basic understanding of the following concepts:

- Digital Image Processing: Familiarity with fundamental techniques, such as image filtering, sampling, and interpolation.

- Machine Learning Basics: Understanding core concepts, including supervised learning, loss functions, and model evaluation metrics.

- Deep Learning Fundamentals: Knowledge of neural networks, particularly convolutional neural networks (CNNs), and their applications in computer vision tasks.

- Mathematics for AI: A grasp of linear algebra, calculus, and probability, especially regarding optimization and statistical methods used in model training.

- Programming Skills: Experience with Python and popular deep learning libraries like TensorFlow or PyTorch for practical implementations.

Image Super-Resolution

Low resolution images can be modeled from high resolution images using the below formula, where D is the degradation function, Iy is the high resolution image, Ix is the low resolution image, and 𝜎 is the noise.

.png)

Where:

- Ix is the low-resolution image,

- Iy is the high-resolution image,

- D is the degradation function, which can include operations like blurring, downsampling, and compression,

- 𝜎 represents noise introduced during the degradation process.

The degradation parameters D and 𝜎 are unknown; only the high resolution image and the corresponding low resolution image are provided. The task of the neural network is to find the inverse function of degradation using just the HR and LR image data.

Super-Resolution Methods and Techniques

There are many methods used to solve this task. We will cover the following:

- Pre-Upsampling Super Resolution

- Post-Upsampling Super Resolution

- Residual Networks

- Multi-Stage Residual Networks

- Recursive Networks

- Progressive Reconstruction Networks

- Multi-Branch Networks

- Attention-Based Networks

- Generative Models

We’ll look at several example algorithms for each.

Pre-Upsampling Super Resolution

The methods under this bracket use traditional techniques–like bicubic interpolation and deep learning–to refine an upsampled image. The most popular method, SRCNN, was also the first to use deep learning and has achieved impressive results.

SRCNN

SRCNN is a simple CNN architecture consisting of three layers: one for patch extraction, non-linear mapping, and reconstruction. The patch extraction layer is used to extract dense patches from the input and represent them using convolutional filters. The non-linear mapping layer consists of 1×1 convolutional filters used to change the number of channels and add non-linearity. As you might have guessed, the final reconstruction layer reconstructs the high-resolution image.

The MSE loss function trains the network, and PSNR (discussed below in the Metrics section) evaluates the results. We will discuss both of these in more detail later.

VDSR

Very Deep Super Resolution (VDSR) is an improvement on SRCNN, with the addition of the following features:

- As the name signifies, a deep network with small 3×3 convolutional filters is used instead of a smaller network with large convolutional filters. This is based on the VGG architecture.

- The network tries to learn the residual of the output image and the interpolated input rather than learning the direct mapping (like SRCNN), as shown in the figure above. This simplifies the task. The initial low-resolution image is added to the network output to get the final HR output.

- Gradient clipping is used to train the deep network with higher learning rates.

Post-Upsampling Super-Resolution

Since the feature extraction process in pre-upsampling SR occurs in the high-resolution space, the computational power required is also on the higher end. Post-upsampling SR tries to solve this by doing feature extraction in the lower resolution space, then doing upsampling only at the end, therefore significantly reducing computation. Also, instead of using simple bicubic interpolation for upsampling, a learned upsampling in deconvolution/sub-pixel convolution is used, thus making the network trainable end-to-end.

Let’s discuss a few popular techniques following this structure.

FSRCNN

As can be seen in the above figure, the major changes between SRCNN and FSRCNN are:

- There was no pre-processing or upsampling at the beginning. The feature extraction took place in the low-resolution space.

- A 1×1 convolution is used after the initial 5×5 convolution to reduce the number of channels and hence lesser computation and memory, similar to how the Inception network is developed.

- Multiple 3×3 convolutions are used instead of having a big convolutional filter, similar to how the VGG network works by simplifying the architecture to reduce the number of parameters.

- Upsampling is done using a learned deconvolutional filter, thus improving the model.

FSRCNN ultimately achieves better results than SRCNN while also being faster.

ESPCN

ESPCN introduces the concept of sub-pixel convolution to replace the deconvolutional layer for upsampling. This solves two problems associated with it:

- Deconvolution happens in the high resolution space, and thus is more computationally expensive.

- It resolves the checkerboard issue in deconvolution, which occurs due to the overlap operation of convolution (shown below).

The figure below shows that sub-pixel convolution works by converting depth to space. Pixels from multiple channels in a low-resolution image are rearranged into a single high-resolution image channel. For example, an input image of size 5×5×4 can rearrange the pixels in the final four channels to a single channel, resulting in a 10×10 HR image.

Let’s now discuss a few more architectures which are based on the techniques from the figure below.

Residual Networks

EDSR

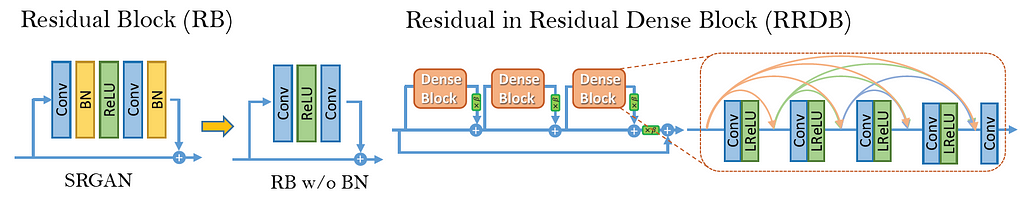

The EDSR architecture is based on the SRResNet architecture, consisting of multiple residual blocks. The residual block in EDSR is shown above. The major difference from SRResNet is that the Batch Normalization layers are removed. The author states that BN normalizes the input, thus limiting the range of the network; removal of BN results in an improvement in accuracy. The BN layers consume memory, and removing them leads to up to a 40% memory reduction, making the network training more efficient.

MDSR

MDSR is an extension of EDSR, featuring multiple input and output modules that provide corresponding resolution outputs at 2x, 3x, and 4x. The pre-processing modules for scale-specific inputs start with two residual blocks that utilize 5×5 kernels. A larger kernel is employed in the pre-processing layers to maintain a shallow network while ensuring a high receptive field.

At the end of the scale-specific pre-processing modules are shared residual blocks, which serve as a common block for data across all resolutions. Following these shared, residual blocks are the scale-specific upsampling modules. Although the overall depth of MDSR is 5 times greater than that of single-scale EDSR, the number of parameters is only 2.5 times greater, rather than 5 times, due to the shared parameters. MDSR achieves results comparable to those of scale-specific EDSR, even with fewer parameters than the combined scale-specific EDSR models.

CARN

In the paper Fast, Accurate, and Lightweight Super-Resolution with Cascading Residual Network, the authors have proposed the following advancements on top of a traditional residual network:

- A cascading mechanism at both the local and global level, to incorporate features from multiple layers and allow the network to receive more information.

- In addition to CARN, a smaller CARN-M is proposed to have a lighter architecture without much deterioration in results, using recursive network architecture.

The global connections in CARN are visualized above. The culmination of each cascading block with a 1×1 convolution receives inputs from all the previous cascading blocks and the initial input, thus resulting in an effective transfer of information.

Every residual block in a cascading block ends in a 1x1 convolution with connections from all previous residual blocks along with the main input, similar to how global cascading works.

The residual block in ResNet is replaced by a newly designed Residual-E block inspired by depthwise convolutions in MobileNet. Instead of depthwise convolutions, group convolutions are used, and the results show a decrease in 1.8-14x the number of computations used, depending on the group size.

To further reduce the number of parameters, a shared residual block (recursive block) is used, resulting in a reduced number of parameters by up to three times the original number. As seen in (d) above, a recursive shared block helps reduce the total number of parameters.

Multi-Stage Residual Networks

A multi-stage design is considered in a few architectures to improve their performance by dealing with feature extraction separately in the low-resolution and high-resolution space. The first stage predicts the coarse features, while the later stage improves on them. Let’s discuss an architecture involving one of these multi-stage networks.

BTSRN

The figure above illustrates the structure of the BTSRN, which is composed of two stages: a low-resolution (LR) stage and a high-resolution (HR) stage. The LR stage includes six residual blocks, while the HR stage consists of four blocks. Convolution operations in the HR stage require more computational power due to the larger input size. The number of blocks in both stages is strategically chosen to strike a balance between accuracy and performance.

The output of the LR stage is upsampled before being sent to the HR stage. This is done by adding the outputs of the Deconvolution layer and Nearest Neighbor upsampling.

The authors propose a novel residual block named PConv, as seen in (d) in the figure above. Based on the results, the proposed block achieves a good trade-off between accuracy and performance.

Similar to EDSR, Batch Normalization is avoided to prevent re-centering and re-scaling, which is found to be detrimental. This is because super-resolution is a regression task, and thus, target outputs are highly correlated with inputs’ first-order statistics.

Recursive Networks

Recursive networks employ shared network parameters in convolutional layers to reduce their memory footprint, as seen in CARN-M above. Let’s discuss a few more architectures involving recursive units.

DRCN

Deep Recursive Convolutional Network (DRCN) involves applying the same convolution layer multiple times. As can be seen in the figure above, the convolutional layers in the residual block are shared.

The outputs from all the intermediate shared convolutional blocks and the input are sent to the reconstruction layer, which generates the high-resolution image using all of the inputs. Since multiple inputs are used to generate the output, this architecture can be considered an ensemble of networks.

DRRN

Deep Recursive Residual Network (DRRN) is an improvement over DRCN by having residual blocks in the network over simple convolutional layers. The parameters in every residual block are shared with other residual blocks, as seen in the image above.

As the graph shows, DRRN outperforms SRCNN, ESPCN, VDSR, and DRCN while having a comparable number of parameters.

Progressive Reconstruction Networks

CNNs generally give outputs in a single shot, but getting a high-resolution image with a big scale factor (say 8x) is a tough task for a neural network. To solve this, some network architectures increase the resolution of images in steps. Now, let’s discuss a few networks that follow this style.

LAPSRN

LAPSRN, or MS-LAPSRN, consists of a Laplacian pyramid structure that can upscale images to 2x, 4x, and 8x using a step-by-step approach.

As can be seen in the above figure, LAPSRN consists of multiple stages. The network consists of two branches: the Feature Extraction Branch and the Image Reconstruction Branch. Each iterative stage consists of a Feature Embedding Block and a feature Upsampling Block, as seen in the figure below. The input image is passed through a feature embedding layer to extract features in the low-resolution space, which is then upsampled using transpose convolution. The output learned is a residual image added to the interpolated input to get the high-resolution image. The output of the Feature Upsampling Block is also passed to the next stage, which is used for refining the high-resolution output of this stage and scaling it to the next level. Since lower-resolution outputs are used in refining further stages, shared learning helps the network perform better.

To reduce the network’s memory footprint, the parameters in Feature Embedding, Feature Upsampling, etc., are shared across the stages recursively.

Within the feature embedding block, an individual residual block consists of shared convolution parameters (shown in the figure above) to further reduce the number of parameters.

The authors argued that since each LR input can have multiple HR representations, an L2 loss function produces a smoothed output over all representations, making the images look sloppy. The Charbonnier loss function is used to deal with this, which can better handle outliers.

Multi-branch networks

We’ve observed a clear trend: deeper networks tend to produce better results. However, training these deeper networks can be challenging due to issues with information flow. Residual networks help to mitigate this problem by incorporating shortcut connections. Additionally, multi-branch networks enhance information flow by utilizing multiple branches, allowing information to circulate through different pathways. This results in the integration of information from various receptive fields, leading to improved training outcomes. Let’s explore some networks that utilize this technique.

CMSC

Like other super-resolution frameworks, the Cascaded Multi-Scale Cross-Network (CMSC) has a feature extraction layer, cascaded sub-nets, and a reconstruction layer–shown below.

The cascaded sub-network consists of two branches, as seen in (b). Each branch has different-sized filters, resulting in a different receptive field. Fusion of information from different receptive fields across the module results in better information flow. Multiple blocks of MSCs are stacked one after another to gradually decrease the difference between the output and HR image iteratively. The outputs from all the blocks are passed together to a reconstruction block to get the final HR output.

IDN

Information Distillation Network (IDN) is proposed to achieve fast and accurate results for super-resolution. Like other multi-branch networks, IDN utilizes the capability of multiple branches to improve the information flow in a deep network.

The IDN architecture consists of FBlock for feature extraction, multiple DBlocks, and RBlock for transposed convolution to achieve learned upscaling. The paper’s contribution is in the DBlock, which consists of two units: the Enhancement Unit and the Compression Unit.

The structure of the enhancement unit is illustrated in the figure above. The input is processed through three convolutional filters, each with a size of 3×3, and then divided into slices. One part of the slice is concatenated with the original input to create a shortcut connection to the final layer. The remaining slice is passed through another set of 3×3 convolutional filters. The final output is generated by summing both the inputs and the output from the final layer. This structure effectively captures both short-range and long-range information simultaneously.

The compression unit takes the output of the enhancement unit and passes it through a 1×1 convolutional filter to compress (or reduce) the number of channels.

Attention-Based Networks

The networks discussed so far give equal importance to all spatial locations and channels. However, selective attention to different regions in an image can yield much better results. We shall now discuss a few architectures that help achieve this.

SelNet

SelNet introduces an innovative Selection Unit at the end of convolutional blocks to help determine which information to selectively pass on. A Selection Module consists of a ReLU activation function, followed by a 1×1 convolution and a sigmoid gating mechanism. The Selection Unit is created by multiplying the Selection Module with an identity connection.

A sub-pixel layer, similar to ESPCN, is placed towards the network’s end to achieve learned upscaling. The network learns a residual high-resolution image, which is then added to the interpolated input to produce the final high-resolution image.

RCAN

Throughout this article, we have observed that deeper networks improve performance. To train deeper networks, Residual Channel Attention Networks (RCAN) suggest RIR modules with Channel attention.

Let’s discuss these more in detail.

The input in RCAN is passed through a single convolutional filter for feature extraction, which is then bypassed towards the final layer with a long skip connection. The long skip connection is added to carry the low-frequency signals from the LR image, while the main network (i.e., RIR) focuses on capturing the high-frequency information.

RIR consists of multiple RG blocks, each with a structure shown in the above figure. Each RG block has multiple RCAB modules and a skip connection, referred to as a short skip connection, to help transfer the low-frequency signal.

RCAB has a structure (as shown above) comprised of a GAP module to achieve channel attention, similar to the Squeeze and Excite blocks in SqueezeNet. The channel-wise attention is multiplied by the output from the sigmoid gating function of a convolutional block. This output is then added to the shortcut input connection to get the final output value of a RCAB block.

Generative Models

The networks discussed optimize the pixel difference between predicted and output HR images. Although this metric works fine, it is not ideal; humans don’t distinguish images by pixel difference but by perceptual quality. Generative models (or GANs) try to optimize the perceptual quality to produce images that are pleasant to the human eye. Finally, let’s take a look at a few GAN-related architectures.

SRGAN

SRGAN uses a GAN-based architecture to generate visually pleasing images. It uses the SRResnet network architecture as a backend and employs a multi-task loss to refine the results. The loss consists of three terms:

- MSE loss capturing pixel similarity

- Perceptual similarity loss, which is used to capture high-level information by using a deep network

- Adversarial loss from the discriminator

Although the results obtained had comparatively lower PSNR values, the model achieved more MOS, i.e., a better perceptual quality.

EnhanceNet

EnhanceNet uses a Fully Convolutional Network with residual learning, which employs an extra term in the loss function to capture finer texture information. In addition to the above described losses in SRGAN, a texture loss similar to the one in Style Transfer is employed to capture the finer texture information.

ESRGAN

ESRGAN improves on top of SRGAN by adding a relativistic discriminator. The advantage is that the network is trained not only to tell which image is true or fake but also to make real images look less real compared to the generated images, thus helping to fool the discriminator. Batch normalization in SRGAN has also been removed, and Dense Blocks (inspired by DenseNet) have been used to improve information flow. These Dense Blocks are called Residual-in-Residual Dense Block or RRDB.

Datasets

The following are some of the common datasets used to train super-resolution networks.

- DIV2K: 800 train, 100 validation, and 100 test. 2K resolution images are provided, including both high and low resolution images with 2x, 3x, and 4x downscaling factors. Proposed in the NTIRE17 challenge.

- Flickr2K: 2650 2K images from FLICKR.

- Waterloo: The Waterloo Exploration Database contains 4,744 pristine natural images and 94,880 distorted images created from them.

Loss Functions

In this section, we shall discuss various loss functions which can be used to train the networks.

- Pixel Loss: This is the most simple and common loss function used in training super-resolution networks. L2, L1, or some difference metric is used to evaluate the model. Training with pixel loss optimizes PSNR but doesn’t directly optimize the perceptual quality, and hence, it generates images that might not be pleasing to the human eye.

- Perceptual Loss: Perceptual loss tries to match the high-level features in a generated image with a given HR output image. This is achieved by taking a pre-trained network, like VGG, and using the difference of feature outputs between predicted and output images as a loss. This loss function is introduced in SRGAN.

- Charbonnier Loss: This function is used in LapSRN instead of the generic L2 loss. The results show that Charbonnier loss deals better with outliers and produces sharper images than those generated with L2 loss, which are generally smoother.

- Texture Loss: Introduced in EnhanceNet, this loss function tries to optimize the Gram matrix of feature outputs inspired by the Style Transfer loss function. It trains the network to capture the texture information in an HR image.

- Adversarial Loss: Used in all GAN-related architectures, adversarial loss helps fool the discriminator and generally produces images with better perceptual quality. ESRGAN adds an extra variant of this by using the relativistic discriminator, thus instructing the network not only to make fake images more real but also to make real images look more fake.

Metrics

In this section we shall discuss the various metrics used to compare the performance of various models.

- PSNR: Peak Signal to Noise Ratio is the most common technique used to determine the quality of results. It can be calculated directly from the MSE using the formula below, where L is the maximum pixel value possible (255 for an 8-bit image).

- SSIM: This metric is used to compare the perceptual quality of two images using the formula below, with the mean, variance, and correlation of both images.

Source

- MOS: Mean Opinion Score is a manual way to determine the results of a model, where humans are asked to rate an image between 0 and 5. The results are aggregated and the average result is used as a metric.

By this point you might be like:

Let’s code one of the popular architectures we have discussed so far, ESPCN.

inputs = keras.Input(shape=(None, None, 1))

conv1 = layers.Conv2D(64, 5, activation="tanh", padding="same")(inputs)

conv2 = layers.Conv2D(32, 3, activation="tanh", padding="same")(conv1)

conv3 = layers.Conv2D((upscale_factor*upscale_factor), 3, activation="sigmoid", padding="same")(conv2)

outputs = tf.nn.depth_to_space(conv3, upscale_factor, data_format='NHWC')

model = Model(inputs=inputs, outputs=outputs)

As we know, ESPCN consists of convolutional layers for feature extraction followed by sub-pixel convolution for upsampling. We are using the TensorFlow depth_to_space function to perform sub-pixel convolution.

def gen_dataset(filenames, scale):

# The model trains on 17x17 patches

crop_size_lr = 17

crop_size_hr = 17 * scale

for p in filenames:

image_decoded = cv2.imread("Training/DIV2K_train_HR/"+p.decode(), 3).astype(np.float32) / 255.0

imgYCC = cv2.cvtColor(image_decoded, cv2.COLOR_BGR2YCrCb)

cropped = imgYCC[0:(imgYCC.shape[0] - (imgYCC.shape[0] % scale)),

0:(imgYCC.shape[1] - (imgYCC.shape[1] % scale)), :]

lr = cv2.resize(cropped, (int(cropped.shape[1] / scale), int(cropped.shape[0] / scale)),

interpolation=cv2.INTER_CUBIC)

hr_y = imgYCC[:, :, 0]

lr_y = lr[:, :, 0]

numx = int(lr.shape[0] / crop_size_lr)

numy = int(lr.shape[1] / crop_size_lr)

for i in range(0, numx):

startx = i * crop_size_lr

endx = (i * crop_size_lr) + crop_size_lr

startx_hr = i * crop_size_hr

endx_hr = (i * crop_size_hr) + crop_size_hr

for j in range(0, numy):

starty = j * crop_size_lr

endy = (j * crop_size_lr) + crop_size_lr

starty_hr = j * crop_size_hr

endy_hr = (j * crop_size_hr) + crop_size_hr

crop_lr = lr_y[startx:endx, starty:endy]

crop_hr = hr_y[startx_hr:endx_hr, starty_hr:endy_hr]

hr = crop_hr.reshape((crop_size_hr, crop_size_hr, 1))

lr = crop_lr.reshape((crop_size_lr, crop_size_lr, 1))

yield lr, hr

We will be using the DIV2K dataset to train the model. We split the 2k resolution images into patches of 17×17 to provide model input for training. The authors convert RGB images to the YCrCb format and then upscale the Y channel input using ESPCN. The Cr and Cb channels are upscaled using bicubic interpolation, and all the upscaled channels are stitched together to get the final HR image. Thus, while training, we only need to provide the Y channel of the low-resolution data and the high-resolution images to the model.

FAQ’s

Q: ESRGAN vs Real-ESRGAN vs EDSR: which super-resolution model performs best in 2025?

A: Modern super-resolution model comparison for 2025 applications:

- Real-ESRGAN excels for real-world images with complex degradations, handles artifacts well, ideal for old photos and low-quality images.

- ESRGAN provides excellent perceptual quality for clean synthetic data, strong for anime/artwork upscaling.

- EDSR offers highest PSNR scores for benchmark datasets, good balance of quality and efficiency.

- Use case selection: Real-ESRGAN for practical applications, EDSR for research/benchmarking, specialized models for specific domains (anime, faces).

- Performance metrics: Consider both PSNR/SSIM (fidelity) and LPIPS (perceptual quality).

- Computational requirements: EDSR most efficient, Real-ESRGAN moderate, transformer-based models most demanding.

- Implementation: Pre-trained models widely available, fine-tuning possible for domain-specific applications.

Q: How to train custom super-resolution models for specific image domains?

A: Training domain-specific super-resolution models requires careful dataset preparation and architecture selection:

- Dataset creation - collect high-resolution images from target domain, create low-resolution pairs using realistic degradation models.

- Degradation modeling - implement blur kernels, noise patterns, compression artifacts matching real-world conditions.

- Architecture selection - start with proven architectures (ESRGAN, EDSR), modify for domain requirements.

- Loss functions - combine pixel loss (L1/L2), perceptual loss (VGG features), and adversarial loss for realistic results.

- Training strategy - pre-train on general datasets, fine-tune on domain data, use progressive training with increasing resolution.

- Data augmentation - rotation, flipping, color adjustments while maintaining degradation realism.

- Evaluation - use domain-specific metrics, human evaluation for perceptual quality assessment.

- Hyperparameter tuning - learning rate scheduling, loss weight balancing crucial for stable training.

- Implementation frameworks - PyTorch with specialized SR libraries, TensorFlow implementations available.

Q: What are the best practices for deploying super-resolution models in production?

A: Production deployment of super-resolution models involves optimization and infrastructure considerations:

- Model optimization - convert to optimized formats (TensorRT, ONNX), implement quantization (INT8) for faster inference.

- Batch processing - process multiple images simultaneously for better GPU utilization.

- Memory management - implement tiling for large images, efficient memory allocation strategies.

- API design - RESTful endpoints with proper error handling, rate limiting, authentication.

- Scaling strategies - horizontal scaling with load balancers, auto-scaling based on demand.

- Quality control - input validation, output quality checks, fallback mechanisms for failures.

- Monitoring - track inference time, memory usage, model accuracy metrics.

- Caching - implement intelligent caching for repeated requests, consider edge caching for global applications.

- Hardware optimization - GPU acceleration essential, consider specialized inference hardware.

- User experience - progress indicators for long-running tasks, preview generation for immediate feedback.

Q: How does super-resolution performance vary with different upscaling factors (2x, 4x, 8x)?

A: Super-resolution performance degrades with increasing upscaling factors:

- 2x upscaling achieves highest quality with PSNR >30dB common, most details preserved, fast inference times.

- 4x upscaling represents sweet spot for practical applications, good quality-efficiency balance, PSNR 25-30dB typical.

- 8x upscaling more challenging with PSNR <25dB, requires careful model design and training strategies.

- Quality degradation - higher factors introduce more artifacts, hallucinated details, reduced fidelity to original content.

- Computational scaling - inference time increases quadratically with output resolution, memory usage grows significantly.

- Architecture adaptations - progressive upscaling (2x→2x) sometimes better than direct 4x, attention mechanisms more important for high factors.

- Training considerations - larger receptive fields needed for high factors, more training data required, longer convergence times.

- Application matching - choose factor based on quality requirements, available compute resources, acceptable inference times.

Q: What are the emerging applications of super-resolution technology in 2025?

A: Super-resolution applications continue expanding across industries:

- Medical imaging - enhancing MRI, CT scans for better diagnosis, reducing scan times while maintaining quality.

- Satellite imagery - improving resolution for agriculture monitoring, urban planning, disaster response.

- Video streaming - real-time upscaling for bandwidth optimization, enhancing legacy content for modern displays.

- Gaming - real-time super-resolution (DLSS-style) for better performance, enhancing older games for current hardware.

- Security and surveillance - improving camera footage quality for identification, license plate recognition.

- Cultural preservation - restoring historical photos and documents, digitizing art and manuscripts.

- Mobile photography - computational photography for smartphone cameras, enhancing low-light performance.

- Scientific imaging - astronomy, microscopy applications requiring detail enhancement.

- AR/VR - foveated rendering with super-resolution for efficient high-resolution displays.

- Automotive - enhancing camera feeds for autonomous vehicles, improving safety systems.

Conclusion

In this article, we explored the fundamentals of image super-resolution, its real-world applications, and the taxonomy of popular algorithms, highlighting their respective strengths and limitations. We also covered commonly used datasets, loss functions, and evaluation metrics to help you get started with your own super-resolution experiments. To bring theory into practice, we implemented the Efficient Sub-Pixel Convolutional Neural Network (ESPCN) architecture—a lightweight yet powerful model for real-time image enhancement.

Whether you’re building AI applications in healthcare, surveillance, or content generation, super-resolution can significantly enhance visual clarity and performance.

If you’re looking to accelerate your deep learning workflows, DigitalOcean’s GPU-optimized Droplets offer a fast, scalable, and cost-effective solution. You can also deploy models using our 1-Click AI App Marketplace to test and run models like ESPCN without complex setup.

Super-resolution is a computationally intensive task, and leveraging DigitalOcean’s cloud GPU infrastructure allows you to train and deploy models efficiently—so you can focus on building, not managing infrastructure.

Thanks for learning with the DigitalOcean Community. Check out our offerings for compute, storage, networking, and managed databases.

About the author

With a strong background in data science and over six years of experience, I am passionate about creating in-depth content on technologies. Currently focused on AI, machine learning, and GPU computing, working on topics ranging from deep learning frameworks to optimizing GPU-based workloads.

Still looking for an answer?

This textbox defaults to using Markdown to format your answer.

You can type !ref in this text area to quickly search our full set of tutorials, documentation & marketplace offerings and insert the link!

- Table of contents

- Prerequisites

- Image Super-Resolution

- Super-Resolution Methods and Techniques

- Datasets

- Loss Functions

- Metrics

- FAQ's

- Conclusion

- Resources

Limited Time: Introductory GPU Droplet pricing.

Get simple AI infrastructure starting at $2.99/GPU/hr on-demand. Try GPU Droplets now!

Become a contributor for community

Get paid to write technical tutorials and select a tech-focused charity to receive a matching donation.

DigitalOcean Documentation

Full documentation for every DigitalOcean product.

Resources for startups and AI-native businesses

The Wave has everything you need to know about building a business, from raising funding to marketing your product.

Get our newsletter

Stay up to date by signing up for DigitalOcean’s Infrastructure as a Newsletter.

New accounts only. By submitting your email you agree to our Privacy Policy

The developer cloud

Scale up as you grow — whether you're running one virtual machine or ten thousand.

Get started for free

Sign up and get $200 in credit for your first 60 days with DigitalOcean.*

*This promotional offer applies to new accounts only.